How to conduct Product Experiments: A Guide to Data-Driven Decision Making

Experiments in Product Management are about finding out what needs to change in the product to increase acquisition, engagement, retention and revenue (aka create value).

Or, as I usually put it when I teach the ICAgile Certified Product Management course: your job as a product manager is to ensure “your product makes customers want to join, stay, pay and refer”. That’s value creation for the business and the customer.

After all, the Product is a Vehicle for Value.

If it’s not creating value, find out what’s missing!

Write a hypothesis, design an experiment, learn quickly and improve your product.

Nothing is set in stone in product management - the external market changes (political, economic, social, technological, environmental and legal), new competitors emerge, old competitors exit, new business models appear, suppliers change and, above all, what satisfied customers ten years ago no longer satisfies them. They demand more. The flux of change is constant.

So when copying is not an option (look, I know you’re tempted to, but stop! There is no data showing that what your competitor is doing is profitable, that it even works, or that it would work for you. Besides, who wants to be an also-ran? Be original and have a differentiator!), start experimenting!

Experiments

Which experiment you choose depends on the question you need answered and the resources you have. Depending on your organisational context, experiments may be called a Proof of Concept (POC), Minimum Viable Product (MVP) or something else. They are never the final product!

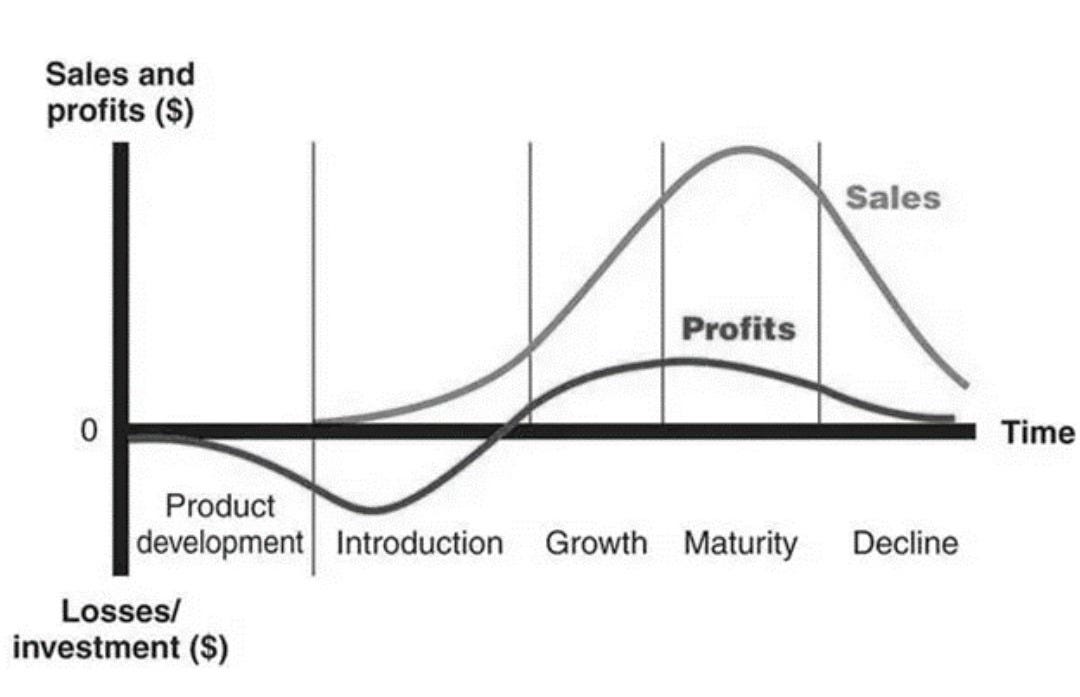

At different stages of your product lifecycle, there will be different questions and therefore experiments that you’ll want to run.

Before you build - make sure you validate that it’s a problem worth solving and that someone will be willing to pay. Don’t decide, in your wisdom and decades of experience as a strong tech professional, to copy the world leaders in the field, their tech and their approach. Sometimes that’s the burden of industry knowledge. If there were already a “best” product in the market, there would be no unsatisfied customers willing to switch or try your product. But we all know there are.

I’m in a constant passive search for alternatives to a lot of the products I use day to day, waiting for the deepest paper cut to get me to actively start searching and switch. More about this in “Using the Wheel of Progress to Understand Customer Jobs: A Guide for Growth and Retention” and “Want to Increase Customer Acquisition and Retention? Understand Jobs to Be Done.”

The kind of experiments you’ll need here are qualitative - think Surveys and Interviews.

Once your product is introduced in the market - you’ll start gathering real data. Does what is happening live match what you expected in the business case and what you learned before launch? If not, it’s time to understand the data and adjust.

Experiments are about understanding the customer journey, from how customers discover you to what they do in your product and every flow they could experience. Seek to identify and eliminate friction: the paper cuts and leaky buckets where engagement drops off, usage declines and churn becomes inevitable. That is where value is eroded, because customers didn’t get what they had hoped for from your product experience. At the same time, look for moments in the customer journey where the experience brings users joy, and amplify them. Design a growth experiment here using the product-led growth (PLG) approaches I’ve written about. Think of easy ways for users to tell a friend, post on TikTok, upgrade, unlock more experiences or simply post a review on the app store. Make it easy, and work with their positive emotion when you make the ask.

Why Design Experiments?

You run an experiment when you hit a roadblock in achieving key business goals. Maybe:

Conversion is low – You need to optimise onboarding, checkout, or pricing.

Revenue isn’t growing fast enough – Time to test new pricing, upsells, or monetisation models.

Retention is slipping – What user engagement features, loyalty programs, or reactivation strategies might turn things around?

Engagement isn’t where you want it – Could better content, notifications, or feature improvements make a difference?

Acquisition needs a boost – Which marketing channels, messaging, or referral incentives will bring more users in?

Instead of guessing, experiments validate hypotheses with real user behaviour. No more gut-feel decisions that waste time and resources. Instead, you get data-driven insights that reduce risk and drive impact.

Because in Product, your one job is creating value—for customers and the business. Every experiment is a step toward that.

What is a Product Experiment?

A product experiment is a structured way to test how a change impacts user behaviour and business outcomes. It’s not guesswork—it’s the scientific method in action:

Spot a problem or opportunity – Something’s not working, or there’s a chance to improve.

Form a hypothesis – “If we do X, we expect Y to happen.”

Design the experiment – What will you test? How will you measure success?

Run the test – Get real user data, not opinions.

Analyse results – Did the change make things better, worse, or no different?

Decide what’s next – Double down, tweak, or scrap it.

Experiments turn assumptions into evidence-based insights, so you invest in changes that actually improve the product—not just ones that sound good in a meeting.

How to write a Hypothesis?

A hypothesis is a well-informed guess about the relationship between two or more variables. In Product, it’s your best prediction of what change will drive impact.

A good hypothesis is:

Rooted in Research & Observation – It’s not just a gut feeling. Use existing data, customer feedback, and industry trends to make sure your hypothesis is grounded in reality.

Clear & Specific – A hypothesis should leave no room for confusion. Define exactly what will change and what impact you expect.

Testable – If you can’t measure it, you can’t validate it. Ensure both the independent variable (the change you make) and the dependent variable (the outcome you measure) are trackable.

Falsifiable – A hypothesis should be possible to disprove. If the results don’t support it, you know to pivot.

Expressed as a Relationship – Use the "If X, then Y" format to define the cause-and-effect relationship.

Bound by Duration & Pass/Fail Criteria – Set a time limit and define what success or failure looks like.

Example: Testing a Hypothesis for a Language Learning App

Problem: User engagement has been dropping significantly two weeks after joining. Feedback suggests learners struggle with confidence in real-world conversations, which impacts motivation.

Improvement Idea: Introduce an AI-powered conversation partner to simulate real-life language practice and improve retention.

Writing the Hypothesis

Objective → Increase user engagement and lesson completion rates.

Expected Change → Introduce an AI-powered conversation feature that allows users to practice real-world dialogues.

Predicted Outcome → Users will spend more time in the app and complete more lessons.

Measurable Metrics →

Primary Metric: Weekly Active Users (WAU)

Secondary Metrics: Session length, lesson completion rate, and percentage of users returning after two weeks

Hypothesis Statement: "If we introduce an AI-powered conversation feature in our language learning app, then we will see an increase in weekly active users (WAU), session length, and lesson completion rates over the next six weeks."

Experiment Design

Hypothesis Statement: "If we introduce an AI-powered conversation feature in our language learning app, then we will see an increase in weekly active users (WAU), session length, and lesson completion rates over the next six weeks."

Rationale: The feature will provide a more engaging and confidence-building experience, increasing usage consistency.

Testing the Hypothesis

Implementation: Roll out the AI conversation feature to 50% of new users (A/B test).

Duration: Run the test for six weeks.

Pass/Fail Criteria:

Pass: If WAU increases by at least 10% and lesson completion by 15%, the feature is considered successful.

Fail: If engagement metrics stay flat or decline, the feature is not adding value.

Early Stop Criteria: If WAU drops by more than 5% in the first three weeks, stop the test early to avoid further user disengagement.

Outcome Analysis: Compare WAU, session length, and lesson completion rates between test and control groups. If statistically significant improvements are observed, move forward with a full rollout.
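
To make “statistically significant” concrete, here is a minimal sketch of one common check, a two-proportion z-test, for a rate metric such as lesson completion. The counts are hypothetical, and the function name and the 0.05 cut-off are my assumptions for illustration, not results from a real experiment.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_control, n_control, conv_test, n_test):
    """Two-sided z-test for a difference between two proportions."""
    p_control = conv_control / n_control
    p_test = conv_test / n_test
    # Pooled rate under the null hypothesis that both groups behave the same.
    pooled = (conv_control + conv_test) / (n_control + n_test)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_test))
    z = (p_test - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    relative_lift = (p_test - p_control) / p_control
    return relative_lift, z, p_value


# Hypothetical counts: users who completed at least one lesson in week six.
lift, z, p_value = two_proportion_z_test(
    conv_control=400, n_control=1000, conv_test=460, n_test=1000
)
print(f"relative lift: {lift:.1%}, z = {z:.2f}, p = {p_value:.4f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

A small p-value only says the difference is unlikely to be noise. You still need the lift to clear the pass criteria you set before the test started.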

Having taught hundreds of people how to design an experiment (session 6 of the ICAgile Product Management course), I’ve found that not many document and think through what the expected success metric will be, when to stop the experiment early or reduce the risk by having control groups to compare against.

Being clear on what you expect will happen, how long the experiment will run and when to stop the experiment early is how you’ll get the support to run experiments in your organisation.
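
One way to force that clarity is to write the plan down as data before the test starts. The sketch below encodes the language learning example; the class, field names and the “inconclusive” outcome (a positive lift that falls short of the pass thresholds) are my own assumptions, so adapt them to your context.

```python
from dataclasses import dataclass


@dataclass
class ExperimentPlan:
    hypothesis: str
    duration_weeks: int
    pass_wau_lift: float          # 0.10 means WAU up at least 10% vs control
    pass_completion_lift: float   # 0.15 means lesson completion up at least 15%
    early_stop_wau_drop: float    # 0.05 means stop if WAU falls more than 5%
    early_stop_window_weeks: int  # early-stop rule only applies in this window

    def decide(self, week, wau_lift, completion_lift):
        """Return the decision for the given week, using lifts vs control."""
        in_window = week <= self.early_stop_window_weeks
        if in_window and wau_lift < -self.early_stop_wau_drop:
            return "stop early"
        if week < self.duration_weeks:
            return "keep running"
        if (wau_lift >= self.pass_wau_lift
                and completion_lift >= self.pass_completion_lift):
            return "pass"
        if wau_lift <= 0 and completion_lift <= 0:
            return "fail"
        # Assumption: a lift above zero but below the bar is neither pass nor fail.
        return "inconclusive"


plan = ExperimentPlan(
    hypothesis="AI conversation partner increases WAU and lesson completion",
    duration_weeks=6,
    pass_wau_lift=0.10,
    pass_completion_lift=0.15,
    early_stop_wau_drop=0.05,
    early_stop_window_weeks=3,
)
print(plan.decide(week=2, wau_lift=-0.08, completion_lift=0.00))
print(plan.decide(week=6, wau_lift=0.12, completion_lift=0.16))
```

Sharing something like this with stakeholders up front means nobody argues about what counts as success after the results are in.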

Okay! Let’s talk about the different types of experiments by stage in the Product Lifecycle.

Product Experiments throughout the Product Lifecycle

1. Pre-Introduction Stage: Validating Demand & Problem-Solution Fit

Before you build anything, validate that the problem is worth solving. Find out who your ideal customer is, how they’re solving this problem today, what they like and don’t like, and what kind of solution they’d actually use. If there’s no demand, stop. If there is, find your earliest adopters and work towards product-market fit before investing heavily in development.

Ways to Validate Demand & Problem-Solution Fit

1. Surveys

Surveys help you collect broad, quantitative insights on market demand, customer pain points, and preferences.

💡 How to use it:

Start with a hypothesis about your target audience.

Go where they are—Facebook groups, Reddit, online forums, or communities.

Observe before posting. What patterns do you see? Can you contribute value to the discussion?

If posting, check if permission is needed, clearly explain why you're conducting the survey, and engage in responses.

✅ Advantages: Quick way to gather data at scale. Can give statistically significant insights when the sample is large and representative enough.

❌ Disadvantages: Lacks depth—won’t reveal why people behave the way they do.

⏳ Timeframe: 1-2 weeks.

💰 Budget: Low (survey tools and possible incentives).
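
How many responses are “enough”? A rough way to judge is the margin of error on a survey percentage. This is a sketch using the standard normal approximation; the 62% figure is hypothetical, and the formula assumes a random sample, which a survey posted in a community group usually is not, so treat the result as a best case.

```python
from math import sqrt
from statistics import NormalDist


def margin_of_error(p_hat, n, confidence=0.95):
    """Normal-approximation margin of error for a survey proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * sqrt(p_hat * (1 - p_hat) / n)


# Hypothetical result: 62% of respondents say they have the problem.
for n in (50, 200, 1000):
    moe = margin_of_error(0.62, n)
    print(f"n={n}: 62% +/- {moe:.1%}")
```

The margin shrinks with the square root of the sample size, so quadrupling your responses only halves the uncertainty.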

2. Interviews

One-on-one conversations with potential users to get deep qualitative insights into their experiences.

💡 How to use it:

Ask open-ended questions. Let users tell their story. Summon your inner Bob Moesta with your questions and active listening.

I like to run interviews alongside surveys. End every survey with “Can I contact you if we have more questions?”

Listen actively. The most valuable insights often come when the conversation takes an unexpected turn.

✅ Advantages: Rich, detailed insights with the flexibility to explore deeper.

❌ Disadvantages: Time-intensive. Bias can creep in based on how questions are asked.

⏳ Timeframe: 2-4 weeks.

💰 Budget: Low to medium (depends on incentives).

3. Sketches & Storyboards

Quick visual representations of user journeys and product interactions.

💡 How to use it:

Sketch out the experience. Think through key moments a user will have with your product.

Share with potential customers to validate assumptions before writing a single line of code.

✅ Advantages: Easy to iterate, low-cost.

❌ Disadvantages: Users must interpret it—real-world data is missing.

⏳ Timeframe: 1-2 weeks.

💰 Budget: Minimal (just time investment).

4. Paper Prototyping

A low-fidelity, hand-drawn mock-up of your product’s interface.

💡 How to use it:

Sketch out screen-by-screen flows on paper or butcher’s paper.

Show it to users and walk them through how it works.

I used this method extensively at Virgin, validating new product ideas directly with customers in stores.

✅ Advantages: Fast, cheap, and great for early feedback.

❌ Disadvantages: Can’t test real interactivity.

⏳ Timeframe: 1-2 weeks.

💰 Budget: Minimal (paper and markers).

5. Landing Page Smoke Test

A simple landing page that presents your product concept and measures sign-up rates as an indicator of demand.

💡 How to use it:

Before launch - this is about validating the size of the demand for the product idea. This can then be used as a way to capture users as part of a waitlist. Note, not all users on your waitlist will convert.

After launch - you could use this to consider how to grow the product revenue through exposing existing customers to a landing page for a feature test. As they’re already

✅ Advantages: Real-world data before building.

❌ Disadvantages: Doesn’t guarantee long-term adoption.

⏳ Timeframe: 2-4 weeks.

💰 Budget: Low (landing page + basic ads).

6. Waitlists

Capturing sign-ups before launch to measure interest and build anticipation.

💡 When to use it:

If you want early traction and demand validation.

If you plan to create a sense of exclusivity before release.

✅ Advantages: Creates urgency, builds early traction.

❌ Disadvantages: Not all sign-ups will convert to paying customers.

⏳ Timeframe: Ongoing.

💰 Budget: Low (email capture tool).

7. Crowdfunding

A way to raise funds and validate demand through platforms like Kickstarter or Indiegogo.

💡 When to use it:

If you need capital before development, and the best place to get it is from potential and future customers.

Crowdfunding allows you to test willingness to pay and market interest.

✅ Advantages: Funds development while proving demand.

❌ Disadvantages: Success depends on strong marketing, and outcomes are unpredictable.

⏳ Timeframe: Varies.

💰 Budget: Medium (ad spend, campaign video, marketing efforts).

8. Pre-Orders

Selling your product before it’s built to test demand and secure revenue upfront.

💡 When to use it:

This is the best test of willingness to pay, as you’re asking customers to pre-order and pay for the product before it’s ready.

It’s one of the strongest signals of purchase intent.

Plus, it provides early sales to fund production.

✅ Advantages: Cash flow before launch, clear demand signals.

❌ Disadvantages: If development is delayed, refunds and customer frustration become a risk.

⏳ Timeframe: Ongoing.

💰 Budget: Varies (payment processing, website setup).

Before you build, validate. Assumptions aren’t facts until you have real data from real users. The right validation method depends on what you need to learn—whether it’s broad market demand (surveys, smoke tests) or deep insights into behaviour (interviews, prototypes).

Build smart. Build based on real signals.

2. Introduction Stage: Acquisition, Activation, Engagement & Retention

The Introduction Stage in the Product Lifecycle is when your product is live, but adoption is still growing. It starts with the innovators and early adopters, but you need to cross the chasm into the early majority if you want the product to move into the Growth Stage.

You’re moving from validation to traction—figuring out what works, what doesn’t, and how to optimise acquisition, engagement, and retention.

But the product is now live. That means you have real data (not just assumptions): the current acquisition rate, activation rate and engagement rate, where customers are coming from, how long they engage and, through customer feedback, what they think.

Use this to inform your experiments!

Here are a few experiments you could run during this stage:

1. Acquisition & Activation Experiments

Your goal: Get more users in the door and make sure they start using your product.

1. A/B Testing Landing Pages

💡 What to test: Messaging, call to action (CTAs), images, videos, form fields, sign-up flow.

✅ Why: Different messaging resonates with different audiences—find what converts best.

📊 Success Metrics: Sign-up rate, conversion rate.

⏳ Timeframe: 2-4 weeks.
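
Whether 2-4 weeks is realistic depends on your traffic. The sketch below uses the standard two-proportion sample size formula to estimate how many visitors each variant needs; the baseline rate, target lift, daily traffic and the 5% significance / 80% power defaults are all assumptions for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Visitors needed in each variant to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical: 5% sign-up rate today, hoping to detect a 20% relative lift.
n = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.20)
daily_visitors = 500  # hypothetical traffic, split evenly across two variants
days_needed = ceil(2 * n / daily_visitors)
print(f"{n} visitors per variant, about {days_needed} days at {daily_visitors}/day")
```

If the number of days comes out far beyond your timeframe, test a bolder change (a bigger expected lift needs fewer visitors) or pick a higher-traffic page.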

2. Optimising Sign-Up Flow

💡 What to test: Single-step vs multi-step sign-up, social logins, email-only vs password-required.

✅ Why: Friction in sign-up can kill conversion.

📊 Success Metrics: Sign-up completion rate, drop-off rate.

⏳ Timeframe: 2-4 weeks.

3. Referral Incentive Testing

💡 What to test: Incentive types (discounts, credits, exclusive access), invite mechanisms.

✅ Why: Referrals drive organic growth, but the right incentive matters. Base it on what you know about what motivates your customer!

📊 Success Metrics: Referral conversion rate, Customer Acquisition Cost (CAC).

⏳ Timeframe: 4-6 weeks.

4. Ad & Channel Testing

💡 What to test: Paid ads (Google, Facebook, TikTok), influencer partnerships, SEO content.

✅ Why: You need to find the most cost-effective way to acquire users. Go where your users are.

📊 Success Metrics: Customer Acquisition Cost (CAC), click-through rate (CTR), conversion rate.

⏳ Timeframe: 4-6 weeks.

2. Engagement Experiments

Your goal: Get users to actively use your product, not just sign up.

1. Onboarding Flow Optimisation

💡 What to test: Interactive tutorials, tooltips, email sequences, personalised onboarding.

✅ Why: Users who don’t see value quickly churn.

📊 Success Metrics: Activation rate, feature adoption rate.

⏳ Timeframe: 4 weeks.

2. Gamification & Streaks

💡 What to test: Streaks, badges, progress bars, rewards for consistent use.

✅ Why: Dopamine drives engagement, so keep users coming back. Understand your users and what motivates them to increase engagement. Think behavioural science, or BJ Fogg’s MAP model (motivation, ability and prompt).

📊 Success Metrics: Daily Active Users (DAU), session length, retention.

⏳ Timeframe: 4-6 weeks.

3. In-App Feature Discovery

💡 What to test: Product tours, feature highlight pop-ups, nudges to try advanced features.

✅ Why: Users won’t engage if they don’t know what’s available.

📊 Success Metrics: Feature adoption rate, time to first meaningful action.

⏳ Timeframe: 4 weeks.

3. Retention & Monetisation Experiments

Your goal: Keep users coming back and start generating revenue.

1. Subscription & Pricing Model Tests

💡 What to test: Free trial vs freemium, monthly vs annual pricing, different price points.

✅ Why: Small pricing tweaks can massively impact revenue.

📊 Success Metrics: Conversion to paid, churn rate.

⏳ Timeframe: 6-8 weeks.

2. Reactivation Campaigns

💡 What to test: Win-back emails, push notifications, limited-time offers for inactive users.

✅ Why: Bringing back a churned user is cheaper than acquiring a new one.

📊 Success Metrics: Re-engagement rate, open rate, click-through rate.

⏳ Timeframe: 4 weeks.

3. Personalisation & AI Recommendations

💡 What to test: Content recommendations, suggested features based on user behaviour.

✅ Why: Tailored experiences boost retention.

📊 Success Metrics: Engagement time, retention rate.

⏳ Timeframe: 6 weeks.

The Introduction Stage is about figuring out what works and doubling down. Your experiments should focus on reducing friction, increasing engagement, and driving monetisation.

If you’re not testing, you’re guessing. Run experiments, learn fast, and optimise for growth.

3. Growth Stage: Experiments to Scale, Retain and Monetise

At the Growth Stage, your product has traction, users are engaging, and now it’s time to scale efficiently, increase retention, and expand revenue. Your experiments should focus on optimising existing success, expanding the user base, and increasing lifetime value.

1. Acquisition Experiments: Expanding Your Reach

Your goal: Find new, cost-effective ways to attract more users and grow your market share.

1. Scaling Paid Acquisition (CAC vs LTV Analysis)

💡 What to test: New ad creatives, audiences, and bidding strategies.

✅ Why: Ads that worked in the early stage may not scale profitably—optimise for better CAC (Customer Acquisition Cost) vs LTV (Lifetime Value).

📊 Success Metrics: CAC, LTV, ROAS (Return on Ad Spend).

⏳ Timeframe: 4-6 weeks.
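
Here is a minimal sketch of the arithmetic behind these metrics. The LTV formula is one common simplification (margin-adjusted monthly revenue per user divided by monthly churn), not the only way to calculate it, and every number below is hypothetical.

```python
def cac(ad_spend, new_customers):
    """Customer Acquisition Cost: spend divided by customers acquired."""
    return ad_spend / new_customers


def simple_ltv(monthly_arpu, gross_margin, monthly_churn):
    """Simplified LTV: expected lifetime in months is taken as 1 / churn."""
    return monthly_arpu * gross_margin / monthly_churn


def roas(attributed_revenue, ad_spend):
    """Return on Ad Spend: revenue attributed to the ads per unit spent."""
    return attributed_revenue / ad_spend


# Hypothetical campaign figures.
campaign_cac = cac(ad_spend=5000, new_customers=100)
ltv = simple_ltv(monthly_arpu=20, gross_margin=0.8, monthly_churn=0.05)
print(f"CAC: {campaign_cac:.2f}")
print(f"LTV: {ltv:.2f}")
print(f"LTV:CAC ratio: {ltv / campaign_cac:.1f}")
print(f"ROAS: {roas(attributed_revenue=12000, ad_spend=5000):.2f}")
```

Run the same calculation per creative, audience or channel. A campaign that looks cheap on CAC can still lose money if the users it brings in churn quickly.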

2. SEO & Content Expansion

💡 What to test: Long-form content, video content, new keyword clusters.

✅ Why: Organic growth is cheaper than paid acquisition and compounds over time.

📊 Success Metrics: Organic traffic, keyword ranking, sign-ups from organic channels.

⏳ Timeframe: 8-12 weeks (SEO takes longer to see results).

3. Partnership & Affiliate Marketing

💡 What to test: Influencer partnerships, co-marketing campaigns, affiliate programs.

✅ Why: Leveraging other brands’ audiences accelerates reach.

📊 Success Metrics: Partner-driven sign-ups, conversion rate.

⏳ Timeframe: 6-8 weeks.

4. Referral Optimisation & Virality Loops

💡 What to test: Incentive types (cash vs credits vs free features), invite mechanics, in-product referral nudges.

✅ Why: Word-of-mouth is the cheapest growth channel, but incentives must be compelling.

📊 Success Metrics: Referral rate, invite conversion rate, CAC reduction.

⏳ Timeframe: 4-6 weeks.

2. Activation & Onboarding Experiments

Your goal: Get new users to experience value faster.

1. Personalised Onboarding Flows

💡 What to test:

Custom onboarding based on user type (e.g., beginner vs experienced user).

AI-driven recommendations for first steps.

Interactive onboarding checklists vs guided walkthroughs.

✅ Why: Users who see value quickly are more likely to stay.

📊 Success Metrics: Time to first value, onboarding completion rate, activation rate.

⏳ Timeframe: 4-6 weeks.

2. Reducing Onboarding Friction

💡 What to test:

Social logins vs email-based sign-ups.

Multi-step vs single-page onboarding.

Allowing users to skip steps and explore freely.

✅ Why: Friction at sign-up means lost users.

📊 Success Metrics: Sign-up completion rate, activation rate, drop-off rate.

⏳ Timeframe: 4 weeks.

3. Engagement & Retention Experiments: Keeping Users Active

Your goal: Increase product stickiness and daily usage.

1. Gamification & Progress Systems

💡 What to test:

Streaks, leaderboards, badges, and progress bars.

Rewarding milestone completions.

Social competition (challenges, shared achievements).

✅ Why: Users return more often when progress is visible and rewarded.

📊 Success Metrics: DAU (Daily Active Users), session length, retention rate.

⏳ Timeframe: 6-8 weeks.

2. Habit-Forming Nudges (BJ Fogg’s Model)

💡 What to test:

Push notifications based on user behaviour (e.g., “You’re one step away from unlocking X”).

Email reminders tailored to past activity.

In-app nudges for underused features.

✅ Why: Users need reminders to build habits, but not spammy ones.

📊 Success Metrics: Feature adoption, DAU/WAU, engagement rate.

⏳ Timeframe: 4-6 weeks.
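
If DAU/WAU is new to your team, this sketch shows one way to compute it from a raw activity log. The event format (user id plus date) and the seven-day window ending on the chosen day are assumptions; your analytics tool may define the window differently.

```python
from datetime import date, timedelta


def active_users(events, start, end):
    """Distinct users with at least one event between start and end inclusive."""
    return {user for user, day in events if start <= day <= end}


def stickiness(events, day):
    """DAU divided by WAU for the 7-day window ending on `day`."""
    dau = len(active_users(events, day, day))
    wau = len(active_users(events, day - timedelta(days=6), day))
    return dau / wau if wau else 0.0


# Hypothetical activity log: (user_id, date of activity).
events = [
    ("ana", date(2024, 3, 4)), ("ana", date(2024, 3, 10)),
    ("ben", date(2024, 3, 6)),
    ("cat", date(2024, 3, 10)),
    ("dev", date(2024, 3, 1)),  # falls outside the 7-day window
]
print(f"DAU/WAU: {stickiness(events, date(2024, 3, 10)):.2f}")
```

Compare the ratio between users who received the nudge and a control group that did not, rather than reading it in isolation.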

3. Personalisation & AI-Driven Recommendations

💡 What to test:

AI-driven content suggestions based on past behaviour.

Dynamic in-app homepage tailored to individual user preferences.

Smart search/autocomplete for faster navigation.

✅ Why: The more relevant the experience, the more users engage.

📊 Success Metrics: Session length, feature adoption, retention rate.

⏳ Timeframe: 6-8 weeks.

4. Monetisation Experiments: Maximising Revenue

Your goal: Increase average revenue per user (ARPU) without hurting retention.

1. Subscription Pricing & Upsell Optimisation

💡 What to test:

Different pricing tiers (monthly vs yearly vs lifetime).

Free trials vs freemium with gated features.

In-app upsell messaging timing and placement.

✅ Why: Small pricing changes can have a massive impact on revenue.

📊 Success Metrics: Subscription conversion rate, average revenue per user (ARPU), churn rate.

⏳ Timeframe: 6-8 weeks.
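
Conversion rate alone can mislead in a pricing test, because a higher price usually converts fewer users but earns more from each. A sketch with hypothetical numbers, using revenue per visitor as the tie-breaker (the variant names and prices are made up):

```python
def revenue_per_visitor(visitors, paid_conversions, price):
    """First-period revenue divided by everyone who saw the pricing page."""
    return paid_conversions * price / visitors


# Hypothetical results for two monthly price points.
variants = {
    "A (9.99/month)": {"visitors": 2000, "paid": 120, "price": 9.99},
    "B (12.99/month)": {"visitors": 2000, "paid": 100, "price": 12.99},
}

results = {}
for name, v in variants.items():
    conversion = v["paid"] / v["visitors"]
    rpv = revenue_per_visitor(v["visitors"], v["paid"], v["price"])
    results[name] = rpv
    print(f"{name}: conversion {conversion:.1%}, revenue per visitor {rpv:.4f}")

print("higher revenue per visitor:", max(results, key=results.get))
```

This only looks at the first payment. Keep tracking churn for each variant, since a price that wins on day one can lose over the following months.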

2. Paywall Experimentation

💡 What to test:

Soft vs hard paywalls (free features vs premium gating).

Timing of when a user sees a paywall (early vs later in journey).

Testing different copy and visuals for paywall messaging.

✅ Why: A poorly timed paywall kills conversion.

📊 Success Metrics: Paywall conversion rate, free-to-paid upgrade rate.

⏳ Timeframe: 4-6 weeks.

3. Dynamic Pricing & Discounts

💡 What to test:

Time-limited discount offers for hesitant users.

Tiered pricing based on usage (e.g., “pay-as-you-grow” models).

Location-based pricing for different markets.

✅ Why: Some users convert better with the right pricing strategy.

📊 Success Metrics: Conversion to paid, average revenue per user (ARPU), discount redemption rate.

⏳ Timeframe: 6-8 weeks.

4. Freemium to Paid Upsell Nudges

💡 What to test: Time-based trials, feature-gating strategies, in-app upsell messaging.

✅ Why: You need to convert free users at a higher rate without annoying them.

📊 Success Metrics: Free-to-paid conversion rate, paid churn rate.

⏳ Timeframe: 4-6 weeks.

5. Cart Abandonment & Checkout Optimisation

💡 What to test: Simplified checkout flow, one-click payments, abandoned cart email reminders.

✅ Why: Many users leave during payment—fixing this boosts revenue immediately.

📊 Success Metrics: Checkout completion rate, revenue per visitor.

⏳ Timeframe: 4-6 weeks.

5. Feature Expansion & Product Stickiness Experiments

Your goal: Increase feature adoption and extend product value.

1. Feature Adoption Nudges

💡 What to test:

Contextual tooltips and pop-ups introducing new features.

Limited-time exclusive features for engaged users.

In-app messaging about underused but high-value features.

✅ Why: Many users don’t explore beyond core features unless prompted.

📊 Success Metrics: Feature adoption rate, engagement per session.

⏳ Timeframe: 4-6 weeks.

2. Collaboration & Social Sharing Features

💡 What to test:

Real-time collaboration tools (if applicable).

Sharing progress or achievements on social media.

Inviting teammates or friends directly from the app.

✅ Why: Network effects drive organic growth.

📊 Success Metrics: Social shares, referral conversion rate, DAU growth.

⏳ Timeframe: 6-8 weeks.

6. Expansion Experiments

Your goal: Expand into new markets, user segments, and business models.

1. Internationalisation & Localisation

💡 What to test:

Localised content and language translations. Consider cultural differences.

Adjusted pricing for different geographies. Consider income and revenue differences.

Regional payment methods (e.g., Alipay, Paytm, M-Pesa).

✅ Why: Expanding to global markets opens new revenue streams.

📊 Success Metrics: New market adoption rate, localisation impact on conversion rates.

⏳ Timeframe: 8-12 weeks.

2. B2B Strategic Partnerships & Enterprise Offering

💡 What to test:

Team-based subscription models.

Customisation features for enterprise clients.

Bulk licensing or white-labeling options.

Corporate licensing deals.

✅ Why: B2B clients bring higher contract values.

📊 Success Metrics: Enterprise deal close rate, average contract value (ACV).

⏳ Timeframe: 12+ weeks.

At this stage, growth isn’t about launching random features—it’s about optimising what works.

Focus your in-product experiments on:

Reducing friction (better onboarding, feature discovery)

Increasing engagement (gamification, personalisation)

Maximising monetisation (paywall timing, subscription models)

Expanding reach (internationalisation, enterprise sales)

The best growth teams test constantly. The worst ones rely on opinions.

If you’re not experimenting, you’re not growing. Keep testing, keep learning, and scale what works.

4. Maturity Stage: Experiments to Maximise Retention, Revenue & Longevity

At the Maturity Stage, your product is established, you have a solid user base, and growth has slowed. The focus now shifts to sustaining engagement, reducing churn, increasing monetisation, and expanding into new opportunities.

Do You Even Have the Resources to Run Experiments in the Maturity Stage?

We’ve all been there. You’re managing a mature product, customer growth is flatlining and resources are drying up.

By the time a product reaches maturity, the focus shifts from fast iteration to sustained optimisation. Resources might not be as readily available as in earlier stages because:

Growth has stabilised—you may need to prioritise maintaining profitability over experimentation.

R&D budgets may be tighter, with less appetite for high-risk innovation.

Teams are often focused on operational efficiency rather than big bets.

Engineering is busy with maintenance, performance, and security rather than new experiments.

Can You Run Experiments during the Maturity Stage?

Yes, but you have to be strategic about where to invest resources.

1. Prioritise High-Impact, Low-Risk Experiments

Since resources are limited, focus on experiments that require minimal effort but drive revenue, retention, or efficiency gains.

Example: A/B testing tweaks to pricing pages, onboarding flows, or push notifications.

Example: Removing an underused feature to improve UX and reduce maintenance costs.

2. Leverage Data & Automation Instead of Large Teams

Data is abundant! Mature products often have more data than ever—use that to drive smarter experiments.

Example: AI-driven personalisation can be tested with minimal engineering work.

Example: Churn prediction models can be refined using existing data.

3. Small, Continuous Optimisation vs. Big Bang Changes

Instead of massive feature rollouts, focus on incremental improvements.

Example: Rather than launching a whole new feature, test small UX improvements that remove friction that causes churn or negatively impacts revenue.

Example: Automate manual processes (e.g., AI support chatbots) to free up resources elsewhere, save costs and maximise the product’s profitability.

4. Use No-Code & Low-Code for Faster Testing

Not every experiment requires deep engineering resources.

Example: Landing page A/B tests can be run using no-code tools like Webflow or Unbounce.

Example: Email/push notification optimisations can be tested with marketing automation tools.

5. Align Experiments with Business Efficiency Goals

In the Maturity Stage, the aim is to maximise the profitability of your product. Can it get a new lease of life with a new target market, geography or partner? Think profitability, efficiency, and longevity. Frame experiments in that context:

Does it increase revenue per user? (Upsell experiments, dynamic pricing)

Does it reduce churn? (Personalised retention campaigns, loyalty programs)

Does it improve efficiency? (AI-driven support, reducing feature bloat)

Here are the key experiments to run during this stage:

1. Retention & Engagement Experiments

Your goal: Keep users engaged and prevent churn.

1. Churn Prediction & Prevention Models

💡 What to test:

AI and machine learning models to predict at-risk users.

Personalised re-engagement campaigns (push, email, SMS).

Special discounts or feature unlocks for at-risk users.

✅ Why: Retaining existing users is more cost-effective than acquiring new ones.

📊 Success Metrics: Churn rate, retention rate, reactivation rate.

⏳ Timeframe: 6-8 weeks.
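
Before investing in a machine learning model, a rule-based baseline is often enough to get a first re-engagement experiment running. To be clear, the sketch below is a hand-written heuristic, not a trained model, and the fields, weights and threshold are all assumptions you would tune against your own churn data.

```python
from dataclasses import dataclass


@dataclass
class UserActivity:
    user_id: str
    days_since_last_session: int
    sessions_last_14_days: int
    sessions_prior_14_days: int


def churn_risk(u):
    """Heuristic risk score from 0 (healthy) to 4 (very likely to churn)."""
    score = 0
    if u.days_since_last_session >= 7:
        score += 2  # has gone quiet for a week or more
    if u.sessions_last_14_days < 0.5 * u.sessions_prior_14_days:
        score += 1  # usage has more than halved
    if u.sessions_last_14_days == 0:
        score += 1  # no activity at all in the recent window
    return score


def at_risk(users, threshold=2):
    """User ids whose score meets the threshold, in input order."""
    return [u.user_id for u in users if churn_risk(u) >= threshold]


# Hypothetical users.
users = [
    UserActivity("u1", days_since_last_session=1, sessions_last_14_days=10, sessions_prior_14_days=9),
    UserActivity("u2", days_since_last_session=9, sessions_last_14_days=1, sessions_prior_14_days=8),
    UserActivity("u3", days_since_last_session=3, sessions_last_14_days=2, sessions_prior_14_days=6),
]
print(at_risk(users))
```

Send the re-engagement campaign to a random half of the flagged users and hold the other half back as a control, so you can tell whether the campaign, and not the flag itself, changed retention.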

2. Deep Personalisation & AI-Driven Engagement

💡 What to test:

AI-driven content and feature recommendations.

Predictive nudges based on user behaviour patterns.

Dynamic homepage/dashboard based on individual preferences.

✅ Why: A tailored experience keeps users engaged longer.

📊 Success Metrics: DAU (Daily Active Users), session length, feature adoption.

⏳ Timeframe: 6-8 weeks.

3. VIP/Loyalty Programs

💡 What to test:

Exclusive features or rewards for long-term users.

Tiered loyalty programs with increasing perks.

Gamified engagement rewards (e.g., exclusive badges, early access).

✅ Why: Recognising loyal users improves retention.

📊 Success Metrics: Retention rate, engagement rate, repeat purchase rate.

⏳ Timeframe: 8-12 weeks.

4. Social & Community Engagement

💡 What to test:

User forums or discussion boards.

Social sharing of product usage or achievements.

Community-driven events, challenges, or AMAs (Ask Me Anything).

✅ Why: Users who feel part of a community stay longer.

📊 Success Metrics: DAU, engagement rate, community activity levels.

⏳ Timeframe: 6-8 weeks.

2. Monetisation Optimisation Experiments

Your goal: Maximise revenue from your existing user base.

1. Pricing Optimisation & Tiered Plans

💡 What to test:

Tiered pricing models (e.g., Basic, Pro, Enterprise).

Dynamic pricing based on user engagement.

Bundled features vs à la carte add-ons.

✅ Why: Price changes can significantly impact revenue and retention.

📊 Success Metrics: ARPU (Average Revenue Per User), conversion to paid, churn rate.

⏳ Timeframe: 6-8 weeks.
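
As a rough sketch of how you might compare two pricing variants on ARPU and conversion to paid, here is a small Python example. The `PricingVariant` class, the variant names and the numbers are hypothetical and only there to show the calculation.

```python
from dataclasses import dataclass


@dataclass
class PricingVariant:
    name: str
    users: int        # users exposed to this pricing page
    paid_users: int   # users who converted to a paid plan
    revenue: float    # revenue from this group during the test period


def summarise(variant):
    """Return (ARPU, conversion-to-paid rate) for one variant."""
    arpu = variant.revenue / variant.users
    conversion = variant.paid_users / variant.users
    return arpu, conversion


# Made-up results for a single plan versus a tiered plan.
variants = [
    PricingVariant("single plan", users=1000, paid_users=80, revenue=2400.0),
    PricingVariant("basic/pro/enterprise", users=1000, paid_users=70, revenue=3150.0),
]

for v in variants:
    arpu, conversion = summarise(v)
    print(f"{v.name}: ARPU {arpu:.2f}, conversion to paid {conversion:.1%}")
```

In this made-up data the tiered plan converts fewer users but earns more per user, which is why it pays to track ARPU, conversion and churn together instead of any one of them alone.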

2. Expanding Revenue Streams (New Monetisation Models)

💡 What to test:

Adding a marketplace or third-party integrations.

Offering premium support or consulting services.

Subscription add-ons (e.g., storage, AI tools, analytics).

✅ Why: Mature products need new revenue streams to stay competitive.

📊 Success Metrics: Revenue per user, LTV (Lifetime Value), conversion rate.

⏳ Timeframe: 8-12 weeks.
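
To make LTV concrete, here is a minimal Python sketch using one common simplification: lifetime value as monthly ARPU divided by monthly churn rate. That formula assumes churn stays constant and ignores margins and discounting, so treat it as a first estimate. The function name and figures are assumptions for illustration.

```python
def simple_ltv(monthly_arpu, monthly_churn_rate):
    """Estimate lifetime value as monthly ARPU times expected lifetime in months.

    Assumes constant churn, so expected lifetime is 1 / monthly churn rate.
    """
    if not 0 < monthly_churn_rate <= 1:
        raise ValueError("monthly_churn_rate must be in (0, 1]")
    return monthly_arpu / monthly_churn_rate


# Illustrative figures: a paid add-on lifts ARPU from 20 to 23 with churn unchanged.
ltv_before = simple_ltv(20.0, 0.04)
ltv_after = simple_ltv(23.0, 0.04)
print(f"LTV before: {ltv_before:.0f}, after: {ltv_after:.0f}")
```

If the new revenue stream also changes churn, pass the new churn rate in as well, because a small rise in churn can cancel out the ARPU gain.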

3. Win-Back & Reactivation Offers

💡 What to test:

Special pricing for inactive users.

Re-engagement emails with personalised content.

Limited-time feature unlocks to draw users back.

✅ Why: Churned users are often easier to reactivate than cold prospects.

📊 Success Metrics: Reactivation rate, win-back revenue, churn reduction.

⏳ Timeframe: 6-8 weeks.

3. Product Expansion & Market Growth Experiments

Your goal: Find new opportunities for growth beyond the core product.

1. Feature Sunset & Retirement Testing

💡 What to test:

Identify underused features and measure impact if removed.

Offer users alternatives before removing features.

Measure user frustration vs efficiency gain from simplification.

✅ Why: Mature products can become bloated—simplification improves UX and may attract a new target customer profile.

📊 Success Metrics: Support ticket volume, feature adoption rate, NPS (Net Promoter Score).

⏳ Timeframe: 8-12 weeks.
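
For readers who have not calculated NPS by hand, here is a short Python sketch using the standard definition: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 to 6). The survey responses are made up for illustration.

```python
def net_promoter_score(ratings):
    """NPS = % promoters (9-10) minus % detractors (0-6) on a 0-10 scale."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Made-up survey responses before and after retiring an underused feature.
before = [10, 9, 8, 7, 6, 9, 10, 5, 8, 9]
after = [10, 9, 9, 7, 6, 9, 10, 8, 8, 9]

print("NPS before:", net_promoter_score(before))
print("NPS after:", net_promoter_score(after))
```

With only a handful of responses the score swings a lot, so read it alongside support ticket volume and feature adoption before deciding a removal was safe.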

2. International Expansion & Localisation

💡 What to test:

Launching in new regions with localised content.

Adjusting pricing for different markets.

Offering local payment methods.

✅ Why: New markets create new growth opportunities.

📊 Success Metrics: User growth in new markets, regional revenue, conversion rates.

⏳ Timeframe: 12+ weeks.

3. B2B & Enterprise Expansion

💡 What to test:

White-label or API integrations for businesses.

Enterprise-focused features like admin controls, security compliance.

High-touch sales model for large contracts.

✅ Why: Enterprise clients bring higher contract values and long-term revenue.

📊 Success Metrics: Enterprise deal close rate, revenue per account, retention rate.

⏳ Timeframe: 12+ weeks.

4. UX & Performance Optimisation Experiments

Your goal: Ensure the product remains seamless and scalable.

1. Speed & Performance Optimisation

💡 What to test:

Faster load times for key workflows.

Streamlining database queries and API calls.

Measuring bounce rates before and after optimisations.

✅ Why: Users abandon slow products.

📊 Success Metrics: Page load speed, app responsiveness, bounce rate.

⏳ Timeframe: 4-6 weeks.

2. Reducing User Friction (UX Research-Driven Changes)

💡 What to test:

Simplifying complex workflows.

Reducing the number of steps to complete key actions.

A/B testing different UI layouts.

✅ Why: Mature products often accumulate unnecessary complexity. Removing the bloat makes key tasks easier to complete.

📊 Success Metrics: Task completion rate, user satisfaction, NPS.

⏳ Timeframe: 6-8 weeks.
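
When you A/B test layouts, you also need to check whether the difference in task completion is more than noise. One common way to do that is a two-proportion z-test. The Python sketch below is one option, not the only valid method, and the function name and the numbers are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference in completion rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the assumption that both layouts perform the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: task completions out of users shown each layout.
z, p_value = two_proportion_z_test(successes_a=400, n_a=1000, successes_b=450, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A p-value below the threshold you chose before the test started (0.05 is a common choice) suggests the new layout really does change completion rates. Decide the sample size and threshold up front so you are not tempted to stop the test the moment the numbers look good.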

3. AI & Automation for Customer Support

💡 What to test:

AI-driven chatbots for common support queries.

Automated self-service help centres.

Predictive issue resolution before users contact support.

✅ Why: Reducing support burden improves scalability.

📊 Success Metrics: Support ticket volume, resolution time, CSAT (Customer Satisfaction Score).

⏳ Timeframe: 6-8 weeks.

5. Decline Stage: Experiments to Refresh, Sunset or Exit

When a product reaches the Decline Stage, resources are tight, growth has stalled, and leadership is deciding whether to revive, extract value, or sunset the product.

Experiments in this stage focus on three areas:

1️⃣ Refresh or Revitalise – Can we save the product by finding new demand or fixing existing problems?

2️⃣ Profit Optimisation – How do we make the most revenue with the least investment?

3️⃣ Sunset or Exit – How do we phase out the product with minimal disruption and loss?

1. Refresh or Revitalise Experiments (Can We Save This Product?)

If there’s potential to turn things around, experiments should focus on finding new demand, simplifying the product, or pivoting.

1. Target a New Audience or Market

💡 What to test:

Running localised versions of the product in new regions.

Repositioning the product for a different demographic (e.g., shifting from consumers to businesses).

Testing industry-specific use cases (e.g., rebranding a failing consumer app as an enterprise tool).

📊 Success Metrics: Growth in new sign-ups, increased engagement in target segments.

⏳ Timeframe: 6-12 weeks.

2. Feature Simplification & Streamlining

💡 What to test:

Identifying underused features and removing them to simplify the product.

Reducing friction in key workflows to improve usability.

Launching a "lite" version with only essential features.

📊 Success Metrics: Increased retention, reduced support tickets, improved UX feedback.

⏳ Timeframe: 4-8 weeks.

3. Pivot Experiments

💡 What to test:

Adjusting the product for a new problem space or use case.

Adding features that align with an emerging market need.

Partnering with a larger company to integrate the product into their ecosystem.

📊 Success Metrics: Adoption in new use cases, revenue growth in alternative markets.

⏳ Timeframe: 8-12 weeks.

When to stop: If no new audience, feature, or positioning creates traction, revitalisation is not viable.

2. Profit Optimisation Experiments (Maximising Revenue Before Sunset)

If decline is inevitable, experiments should focus on increasing revenue per user, reducing costs, and extending profitability.

1. Price Optimisation & Upsell Experiments

💡 What to test:

Increasing subscription fees to see if existing users will pay more.

Offering one-time purchases instead of recurring payments.

Creating a premium or exclusive plan for power users.

📊 Success Metrics: ARPU (Average Revenue Per User), conversion to paid.

⏳ Timeframe: 6-8 weeks.
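
Before you test a price increase, it helps to know how much extra churn you can absorb before revenue goes backwards. Here is a minimal Python sketch of that break-even arithmetic. It assumes every user pays the same price and looks at one period only, and the function names and figures are hypothetical.

```python
def break_even_churn(price_increase):
    """Extra churn at which revenue after a price rise equals revenue before.

    price_increase is a fraction, e.g. 0.20 for a 20% rise.
    Derived from (1 - churn) * (1 + price_increase) = 1.
    """
    if price_increase <= 0:
        raise ValueError("price_increase must be positive")
    return price_increase / (1 + price_increase)


def revenue_after(users, price, price_increase, extra_churn):
    """Period revenue once the price rise and the extra churn are applied."""
    return users * (1 - extra_churn) * price * (1 + price_increase)


limit = break_even_churn(0.20)
print(f"A 20% price rise breaks even at {limit:.1%} extra churn")

# Made-up scenario: 1,000 users paying 10 each, with 10% leaving after the rise.
scenario = revenue_after(users=1000, price=10.0, price_increase=0.20, extra_churn=0.10)
print(f"Revenue with 10% extra churn: {scenario:.0f} (was 10000)")
```

If the churn you observe in the experiment stays below the break-even figure, the price rise pays for itself in that period. If it goes above, you have your answer quickly and cheaply.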

2. Tiered Feature Gating & Paywalls

💡 What to test:

Moving previously free features behind a paywall.

Offering paid-only support (priority service for premium users).

Testing time-limited free trials instead of full freemium access.

📊 Success Metrics: Subscription conversion rate, churn impact.

⏳ Timeframe: 4-8 weeks.

3. Automating Support & Reducing Costs

💡 What to test:

AI-powered customer support to reduce manual support costs.

Self-service help centre instead of live support.

Outsourcing support functions to a lower-cost provider.

📊 Success Metrics: Lower support costs, CSAT (Customer Satisfaction Score).

⏳ Timeframe: 4-6 weeks.

When to stop: If price changes drive mass churn, or cost reductions impact usability, the product is not sustainable.

3. Sunset or Exit Experiments (Minimising Disruption & Loss)

If shutdown is inevitable, experiments should ensure a smooth transition and extract remaining value.

1. Migration & Exit Strategy Testing

💡 What to test:

Offering a migration path to another product (internal or external). I look at this as a reverse go-to-market plan, with activities and communication that support a seamless handover.

Partnering with competitors to transition users to their platform.

Selling the user base to a strategic acquirer.

📊 Success Metrics: % of users successfully migrated, revenue from partnerships.

⏳ Timeframe: 8-12 weeks.

2. End-of-Life Monetisation

💡 What to test:

Running a "last chance" lifetime subscription sale.

Selling access to premium features before shutting down.

Offering export tools as a paid service for users transitioning away.

📊 Success Metrics: Revenue from final-phase monetisation.

⏳ Timeframe: 4-8 weeks.

3. Controlled Sunset Messaging

💡 What to test:

Gradual communication instead of an abrupt shutdown notice.

Offering loyalty discounts for users switching to another of the company's products.

Keeping a minimal maintenance team to support legacy users.

📊 Success Metrics: Customer sentiment, PR impact, brand perception post-shutdown.

⏳ Timeframe: 12+ weeks.

When to stop: If migration is unsuccessful, prioritise minimising customer frustration over extracting revenue.

In this final Decline stage of the Product Lifecycle, you’re not trying to scale—you’re testing how to either save, optimise, or exit.

Key focus areas for decline-stage experiments:

1️⃣ Can we save it? → Test new markets, simplification, pivots.

2️⃣ Can we squeeze value from it? → Test pricing, paywalls, automation.

3️⃣ Can we exit smartly? → Test migrations, shutdown strategies, last-phase monetisation.

Even in decline, data should guide the strategy—because guessing your way through this stage is the worst option. Over the years, you’ve built up a lot of trust with these customers. Focus on the customer experience and seek ways to transition them to a new product that makes sense.

What about Surveys and Interviews after the Pre-Introduction stage of the Product Lifecycle?

Before a product is introduced, you’re reliant on what people tell you. However, what they say they want, or why, doesn’t always match what they actually do. That is why data from real customer usage and behaviour is so valuable, and why experiments run after launch are heavily skewed towards quantitative research over qualitative research (surveys and interviews).

However, seeing what people do in the product doesn’t tell you why. That is the gap surveys and interviews fill at every stage after launch.

Introduction Stage (Finding Product-Market Fit)

At this stage, you’re still learning about your customers and whether the product solves their problem. Surveys and interviews help refine positioning and uncover early adoption barriers.

Use surveys for:

✔️ Understanding customer pain points.

✔️ Identifying target demographics.

✔️ Testing early messaging and positioning.

Use interviews for:

✔️ Observing real user behaviour and workarounds.

✔️ Spotting friction in onboarding.

✔️ Identifying what early adopters love/hate.

Growth Stage (Optimising for Scale & Retention)

Now that the product has traction, surveys and interviews help identify roadblocks, improve engagement, and increase retention.

Use surveys for:

✔️ Identifying high-friction areas.

✔️ Spotting feature adoption gaps.

Use interviews for:

✔️ Diving deep into user behaviour (who stays, who churns, why).

✔️ Identifying power users’ habits.

✔️ Finding where to double down on growth strategies.

Maturity Stage (Maximising Retention & Revenue)

The goal here is to reduce churn, improve monetisation, and keep the product competitive. Surveys and interviews help you spot declining engagement signals before it’s too late.

Use surveys for:

✔️ Detecting early churn risks.

✔️ Understanding pricing sensitivity.

✔️ Identifying which features customers actually value.

Use interviews for:

✔️ Discovering upsell & cross-sell opportunities.

✔️ Understanding what drives loyalty.

✔️ Spotting early signs of market fatigue.

Decline Stage (Deciding Whether to Refresh, Optimise, Sunset or Exit)

Surveys and interviews help determine if there’s still a future for the product or if it’s time to sunset it.

Use surveys for:

✔️ Evaluating whether customers still care.

✔️ Identifying which user segments might still engage.

✔️ Measuring demand for a potential pivot.

Use interviews for:

✔️ Learning if a product pivot is viable.

✔️ Finding monetisation opportunities before shutdown.

✔️ Gathering feedback to preserve brand reputation.

In my career I’ve managed products at every stage of the product lifecycle.

Experiments aren’t just a growth tactic—they’re how great products stay relevant, valuable, and competitive at every stage of the product lifecycle.

Valuable products don’t happen by accident. They evolve through continuous learning, iteration, and testing. Whether you’re launching a new feature, increasing acquisition, reducing churn or optimising revenue, experimentation is your most powerful tool for making smart product decisions.

The question isn’t whether to run experiments—it’s whether you can afford not to.

Experiments in product management are essential for making data-driven decisions, mitigating risk, and ensuring product success. By using the right experiment at the right stage, teams can unlock growth, improve user experience, and stay competitive in an evolving market.

Ready to Understand Your Customers on a Deeper Level?

If you’re ready to uncover the most valuable jobs-to-be-done for your customers and unlock growth opportunities, I’d love to help. With customer discovery interviews and the Wheel of Progress, we can align your product or service with what your customers truly need—creating a pathway for long-term success.

Let’s talk! Email me at irene@phronesisadvisory.com and let’s explore how we can harness customer insights to drive your business forward.

Are you new to Product Management and want to learn from me?

I created a Course. For people new to Product Management.

Aligned it with the Learning Outcomes created by Product greats like Jeff Patton and others. Had it certified by the globally recognised ICAgile.

Choose to spend 2 days learning from me - either face to face or via Zoom - with ICAgile Certified Professional in Product Management (ICP-PDM).

And if you’re looking for a sneaky discount, send me an email at irene@phronesisadvisory.com